🧠 Second Brain

Search

Orchestration

Delving deeper, after selecting technology from the myriad available, you’ll inevitably confront the need to manage intermediate levels. This is particularly true when handling unstructured data, which necessitates transformation into a structured format. Orchestrators and cloud-computing frameworks play a crucial role in this process, ensuring efficient data manipulation across different systems and formats. In the following chapters, I’ll elucidate their role in completing the full architectural picture.

At their core, orchestrators:

- Trigger computations at the appropriate moments

- Model dependencies between computations

- Track the execution history of computations

Orchestrators excel in:

- Timing of events

- Identifying and addressing errors

- Restoring correct states

While traditional orchestrators are task-centric, newer ones like Dagster emphasize Data Assets ^gvdd3tf and Software-Defined Asset. This approach enhances scheduling and orchestration, as discussed in Dagster. These advancements align with the Modern Data Stack concepts.

# What is an Orchestrator

For a detailed understanding, refer to What is an Orchestrator.

# The Role of Orchestration in Mastering Complexity

Explore the key features in RW Building Better Analytics Pipelines.

# Abstraction: Data Pipeline as Microservice

Abstractions let you use data pipelines as a microservice on steroids. Why? Because microservices are excellent in scaling but not as good in aligning among different code services. A modern data orchestrator has everything handled around the above reusable abstractions. You can see each task or microservice as a single pipeline with its sole purpose-everything defined in a functional data engineering way. You do not need to start from zero when you start a new microservice or pipeline in the orchestration case.

More on Data Orchestration Trends: The Shift From Data Pipelines to Data Products.

# Tools

- Apache Airflow (originated at Airbnb)

- Luigi (developed by Spotify)

- Azkaban (created at LinkedIn)

- Apache Oozie (suitable for Hadoop systems)

- Dagster

- Prefect

- Temporal

- Mage AI

- orchest.io (integrates with Notebooks)

- Kestra

- Nomad Orchestrator

- Flyte

Selecting a technology should be followed by choosing an Orchestrator. This crucial step often goes overlooked.

For more insights, read Data Orchestration Trends: The Shift From Data Pipelines to Data Products.

# History: Evolution of Tools

Orchestrators have evolved from simple task managers to complex systems integrating with the Modern Data Stack. Let’s trace their journey:

- 1987: The inception with (Vixie) cron

- 2000: The emergence of graphical ETL tools like Oracle Warehouse Builder (OWB), SSIS, Informatica

- 2011: The rise of Hadoop orchestrators like Luigi, Oozie, Azkaban

- 2014: The rise of simple orchestrators like Airflow

- 2019: The advent of modern orchestrators with Python like Prefect, Kedro Dagster, Temporal or even fully SQL framework dbt

- To declarative pipelines fully managed into Ascend.io, Palantir Foundry and other data lake solutions

For an exhaustive list, visit the Awesome Pipeline List on GitHub. More on the history on Bash-Script vs. Stored Procedure vs. Traditional ETL Tools vs. Python-Script - 📖 Data Engineering Design Patterns (DEDP).

Also check

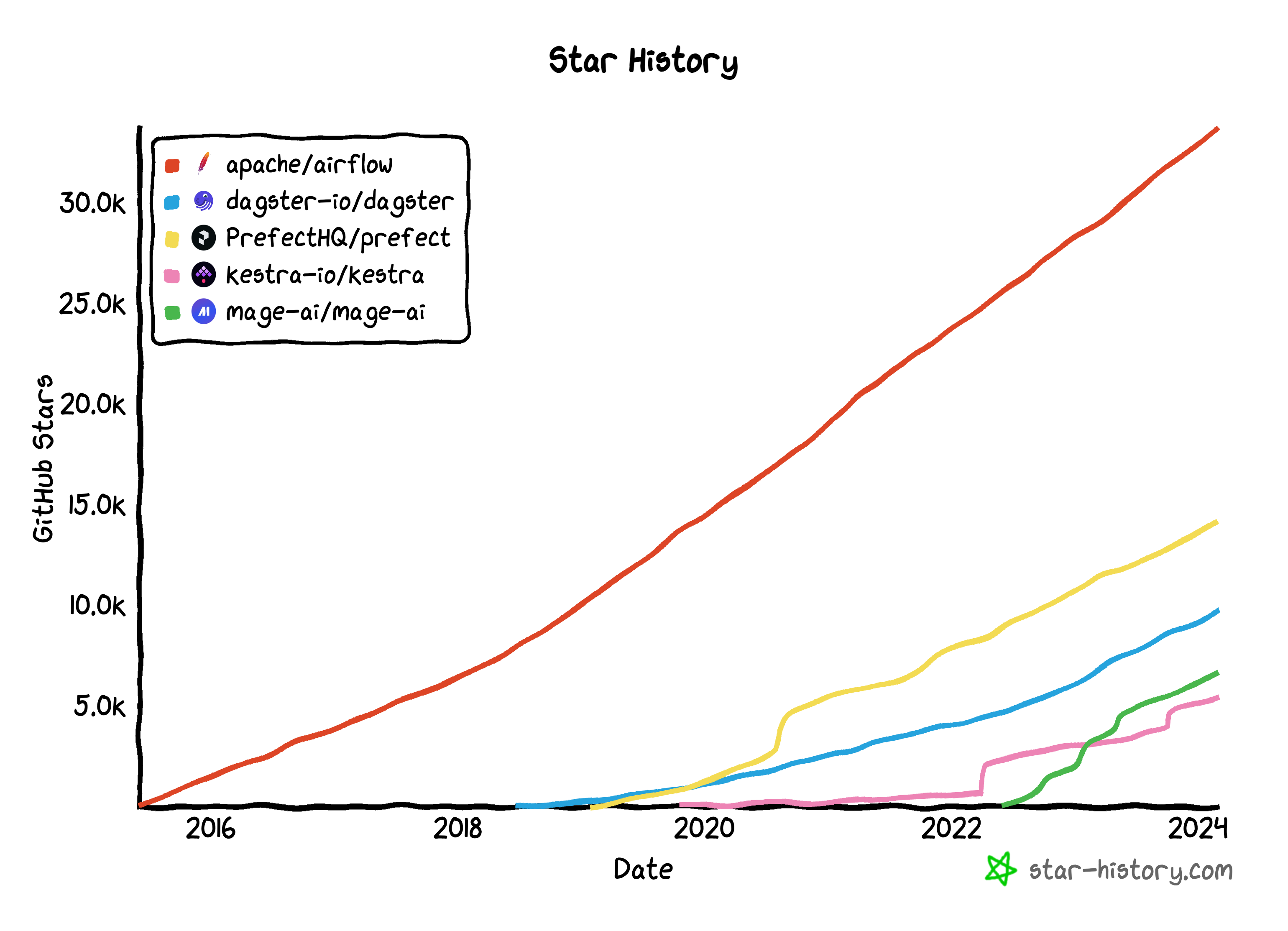

GitHub Star History, eventough they don’t tell you much.

# When to Use Which Tools

As of 2022-09-21:

- Airflow when you need task scheduling only (no data awareness)

- Dagster when you foresee higher-level data engineering problems. Dagster has more abstractions as they grew from first principles with a holistic view in mind from the very beginning. They focus heavily on data integrity, testing, idempotency, data assets, etc.

- Prefect if you need a fast and dynamic modern orchestration with a straightforward way to scale out. They recently revamped the prefect core as

Prefect 2.0 with a new second-generation orchestration engine called

Orion. It has several abstractions that make it a Swiss army knife for general task management.

- With the new engine Orion they built in Prefect 2.0, they’re very similar to Temporal and support fast low latency application orchestration

Or said others in this Tweet - I’d use:

- Airflow for plain task-scheduling

- Prefect fast, low-latency imperative scheduling

- Dagster for data-aware pipelines when you want best-in-class, but opinionated support

Also, explore insights from the podcast Re-Bundling The Data Stack With Data Orchestration And Software Defined Assets Using Dagster | Data Engineering Podcast with Nick Schrock.

# What language does an Orchestrator speak?

What language does an Orchestrator speak

Origin:

References: Python What is an Orchestrator Why you need an Orchestrator Apache Airflow

Created: